Around the first of the year our contract manufacturer contacted us about an urgent problem with HackRF One production. They'd had to stop production because units coming off the line were failing at a high rate. This was quite a surprise because HackRF One is a mature product that has been manufactured regularly for a few years. I continued to find surprises as I went through the process of troubleshooting the problem, and I thought it made a fascinating tale that would be worth sharing.

The reported failure was an inability to write firmware to the flash memory on the board. Our attention quickly turned to the flash chip itself because it was the one thing that had changed since the previous production. The original flash chip in the design had been discontinued, so we had selected a replacement from the same manufacturer. Although we had been careful to test the new chip prior to production, it seemed that somehow the change had resulted in a high failure rate.

Had we overlooked a failure mode because we had tested too small a quantity of the new flash chips? Had the sample parts we tested been different from the parts used in production? We quickly ordered parts from multiple sources and had our contract manufacturer send us some of their parts and new boards for testing. We began testing parts as soon as they arrived at our lab, but even after days of testing samples from various sources we were unable to reproduce the failures reported by the contract manufacturer.

At one point I thought I had managed to reproduce the failure on one of the new boards, but it only happened about 3% of the time. This failure happened regardless of which flash chip was used, and it was easy to work around by retrying. If it happened on the production line, it probably wouldn't even be noticed because it was indistinguishable from a simple user error such as a poor cable connection or a missed button press. Eventually I determined that this low-probability failure mode also affected older boards. It is something we might be able to fix, but it is a low priority. It certainly wasn't the same failure mode that had stopped production.

It seemed that the new flash chip caused no problems, but then what could be causing the failures at the factory? We had them ship us more sample boards, specifically requesting boards that had exhibited failures. They had intended to send us those in the first shipment but accidentally left them out of the package. Because the flash chip was so strongly suspected at the time, we'd all thought that we'd be able to reproduce the failure with one or more of the many chips in that package anyway. One thing that had made it difficult for them to know which boards to ship was that any board that passed testing once would never fail again. For this reason they had deemed it more important to send us fresh, untested boards than boards that had failed and later passed.

When the second batch of boards from the contract manufacturer arrived, we immediately started testing them. We weren't able to reproduce the failure on the first board in the shipment. We weren't able to reproduce the failure on the second board either! Fortunately the next three boards exhibited the failure, and we were finally able to observe the problem in our lab. I isolated the failure to something that happened before the actual programming of the flash, so I was able to develop a test procedure that left the flash empty, avoiding the scenario in which a board that passed once would never fail again. Even after being able to reliably reproduce the failure, it took several days of troubleshooting to fully understand the problem. It was a frustrating process at the time, but the root cause turned out to be quite an interesting bug.

Although the initial symptom was a failure to program flash, programming flash on a new board is actually a multi-step process. First the HackRF One is booted in Device Firmware Upgrade (DFU) mode. This is done by holding down the DFU button while powering on or resetting the board. In DFU mode, the HackRF's microcontroller executes a DFU bootloader function stored in ROM. The host computer speaks to the bootloader over USB and loads HackRF firmware into RAM. Then the bootloader executes this firmware, which appears as a new USB device to the host. Finally the host uses a function of the firmware running in RAM to load another version of the firmware over USB and onto the flash chip.
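
The host side of this sequence can be scripted. The sketch below is a hypothetical factory-style helper, assuming the `dfu-util` and `hackrf_spiflash` command-line tools invoked as in the HackRF documentation (verify the flags and the DFU VID:PID against your versions). The function names, file names, and fixed settling delay are assumptions, not the production test procedure.

```python
import subprocess
import time

# Assumed USB VID:PID of the microcontroller's ROM bootloader in DFU mode,
# as given in the HackRF documentation; verify for your hardware.
DFU_DEVICE = "1fc9:000c"


def programming_steps(dfu_image: str, flash_image: str) -> list[list[str]]:
    """Build the host commands for the two host-driven steps (hypothetical helper)."""
    return [
        # Step 1: the ROM DFU bootloader receives firmware into RAM,
        # then executes it.
        ["dfu-util", "--device", DFU_DEVICE, "--alt", "0",
         "--download", dfu_image],
        # Step 2: the firmware now running from RAM writes an image to flash.
        ["hackrf_spiflash", "-w", flash_image],
    ]


def program_board(dfu_image: str, flash_image: str,
                  settle_s: float = 3.0) -> None:
    """Run both steps; raises CalledProcessError if the flash write fails."""
    load_ram, write_flash = programming_steps(dfu_image, flash_image)
    # The board leaves DFU mode at the end of this step, so its exit status
    # is not treated as authoritative here (an assumption).
    subprocess.run(load_ram)
    # Wait for the RAM firmware to enumerate as a new USB device. A fixed
    # delay is a simplification; polling for the device would be more robust.
    time.sleep(settle_s)
    subprocess.run(write_flash, check=True)
```

Called as `program_board("hackrf_one_usb.dfu", "hackrf_one_usb.bin")` (example file names), a board with the failure described here would show up as step 2 failing, because the RAM firmware never appears on USB.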

I found that the failure happened at the step in which the DFU bootloader launches our firmware from RAM. The load of firmware over USB into RAM appeared to work, but then the DFU bootloader dropped off the bus and the USB host was unable to re-enumerate the device. I probed the board with a voltmeter and oscilloscope, but nearly everything looked as expected. There was a fairly significant voltage glitch on the microcontroller's power supply (VCC), but a probe of a known good board from a previous production revealed a similar glitch. I made a note of it as something to investigate in the future, but it didn't seem to be anything new.

I connected a Black Magic Probe and investigated the state of the microcontroller before and after the failure. Before the failure, the program counter pointed to the ROM region that contains the DFU bootloader. After the failure, the program counter still pointed to the ROM region, suggesting that control may never have passed to the HackRF firmware. I inspected RAM after the failure and found that our firmware was in the correct place but that the first 16 bytes had been replaced by 0xff. It made sense that the bootloader would not attempt to execute our code because it is supposed to perform an integrity check over the first few bytes. Since those bytes were corrupted, the bootloader should have refused to jump to our code.
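
A comparison like this can be made repeatable by diffing a RAM dump against the image that was sent. The sketch below is hypothetical: it assumes the dump was saved to a file (for example with GDB's `dump binary memory` command while attached through the Black Magic Probe) and that both buffers start at the image's load address, which is not given here. The function names are illustrative.

```python
def diff_ranges(expected: bytes, actual: bytes) -> list[tuple[int, int]]:
    """Return [start, end) offsets where `actual` differs from `expected`.

    Only the overlapping length of the two buffers is compared.
    """
    n = min(len(expected), len(actual))
    ranges = []
    start = None
    for i in range(n):
        if expected[i] != actual[i]:
            if start is None:
                start = i                  # a differing run begins
        elif start is not None:
            ranges.append((start, i))      # the run ended just before i
            start = None
    if start is not None:
        ranges.append((start, n))
    return ranges


def describe_corruption(expected: bytes, actual: bytes) -> list[tuple[int, int, bool]]:
    """For each differing run, report whether the device holds only 0xff there."""
    return [(s, e, all(b == 0xFF for b in actual[s:e]))
            for s, e in diff_ranges(expected, actual)]


# Synthetic example (not real HackRF data): a 64-byte image whose first
# 16 bytes were overwritten with 0xff on the device.
image = bytes(range(64))
dump = b"\xff" * 16 + image[16:]
print(describe_corruption(image, dump))  # [(0, 16, True)]
```

A byte that was 0xff in the original image cannot be detected this way, so a real check would want an image without 0xff in the region of interest.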

I monitored the USB communication to see if the firmware image was corrupted before being delivered to the bootloader, but the first 16 bytes were correct in transit. Nothing looked out of the ordinary on USB except that there was no indication that the HackRF firmware had started up. After the bootloader accepted the firmware image, it dropped off the bus, and then the bus was silent.

As my testing progressed, I began to notice a curious thing, and our contract manufacturer reported the very same observation: The RF LED on the board was sometimes dimly illuminated in DFU mode and sometimes completely off. Whenever it was off, the failure would occur; whenever it was dimly on, the board would pass testing. This inconsistency in the state of the RF LED is something that we had observed for years. I had never given it much thought but assumed it might have been caused by some known bugs in reset functions of the microcontroller. Suddenly this behavior was very interesting because it was strongly correlated with the new failure! What causes the RF LED to sometimes be dimly on at boot time? What causes the new failure? Could they be caused by the same thing?

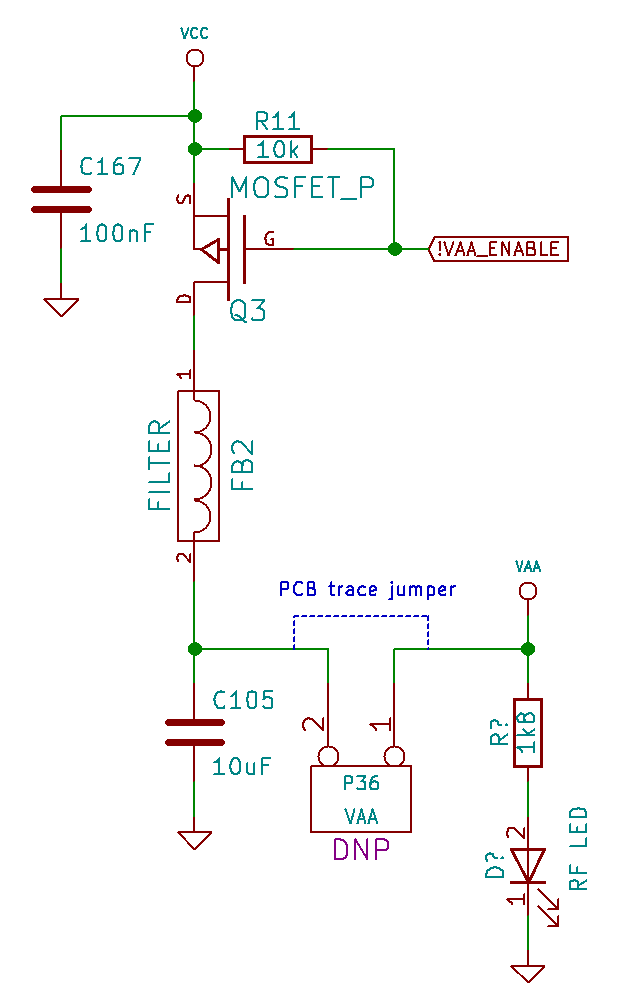

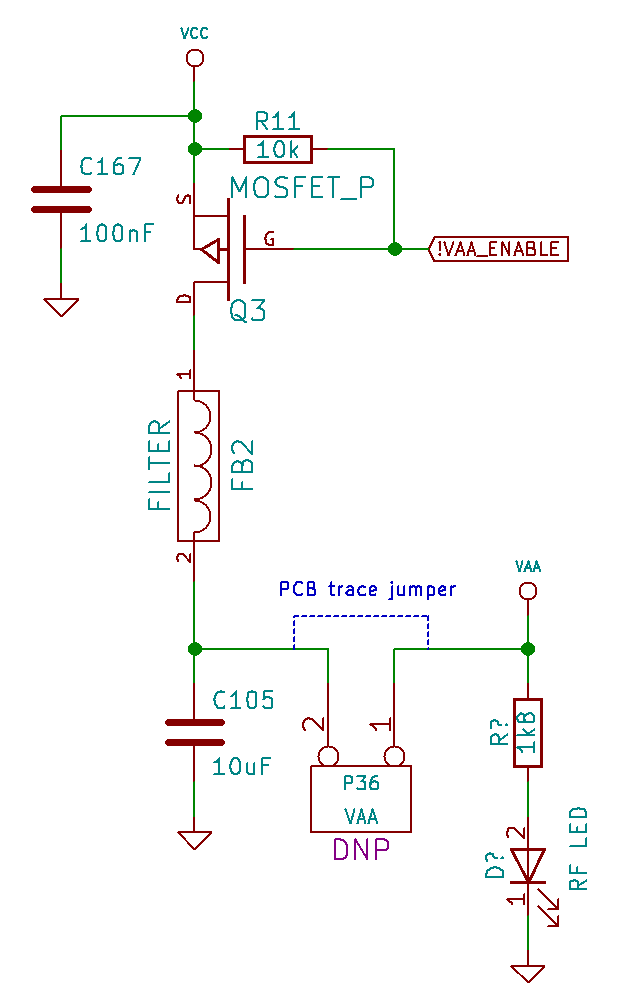

I took a look at the schematic, which reminded me that the RF LED is not connected to a General-Purpose Input/Output (GPIO) pin of the microcontroller. Instead it directly indicates the state of the power supply (VAA) for the RF section of the board. When VAA is low (below about 1.5 volts), the RF LED is off. When VAA is at or near 3.3 volts (the same voltage as VCC), the RF LED should be fully on. If the RF LED is dimly on, VAA must be at approximately 2 volts, the forward voltage of the LED. This isn't enough voltage to power the chips in the RF section, but it is enough to dimly illuminate the LED.

VAA is derived from VCC but is controlled by a MOSFET which switches VAA on and off. At boot time, the MOSFET should be switched off, but somehow some current can leak into VAA. I wasn't sure if this leakage was due to the state of the GPIO signal that controls the MOSFET (!VAA_ENABLE) or if it could be from one of several digital control signals that extend from the VCC power domain into the VAA power domain. I probed all of those signals on both a good board and a failing board but didn't find any significant differences. It wasn't clear why VAA was sometimes partially charged at start-up, and I couldn't find any indication of what might be different between a good board and a bad board.

One thing that was clear was that the RF LED was always dimly illuminated immediately after a failure. If I reset a board into DFU mode using the reset button after a failure, the RF LED would remain dimly lit, and the failure would be avoided on the second attempt. If I reset a board into DFU mode by removing and restoring power instead of using the reset button, the RF LED state became unpredictable. The procedural workaround of retrying with the reset button would have been sufficient to proceed with manufacturing, except that we were nervous about shipping boards that would give end users trouble if they needed to recover from a load of faulty firmware. It might be a support nightmare to have units in the field that do not provide a reliable means of restoring firmware. We certainly wanted to at least understand the root cause of the problem before agreeing to ship units that would require users to follow a procedural workaround.

Meanwhile I had removed a large number of components from one of the failing boards. I had started this process after determining that the flash chip was not causing the problem. In order to prove this without a doubt, I entirely removed the flash chip from a failing board and was still able to reproduce the failure. I had continued removing components that seemed unrelated to the failure just to prove to myself that they were not involved. When investigating the correlation with VAA, I tried removing the MOSFET (Q3) and found that the failure did not occur when Q3 was absent! I also found that removal of the ferrite filter (FB2) on VAA or the capacitor (C105) would prevent the failure. Whenever any of these three components was removed, the failure could be avoided. I tried cutting the trace (P36) that connects the VAA MOSFET and filter to the rest of VAA. Even without any connection to the load, I could prevent the failure by removing any of those three components and induce the failure by restoring all three. Perhaps the charging of VAA was not only correlated with the failure but was somehow the cause of the failure!

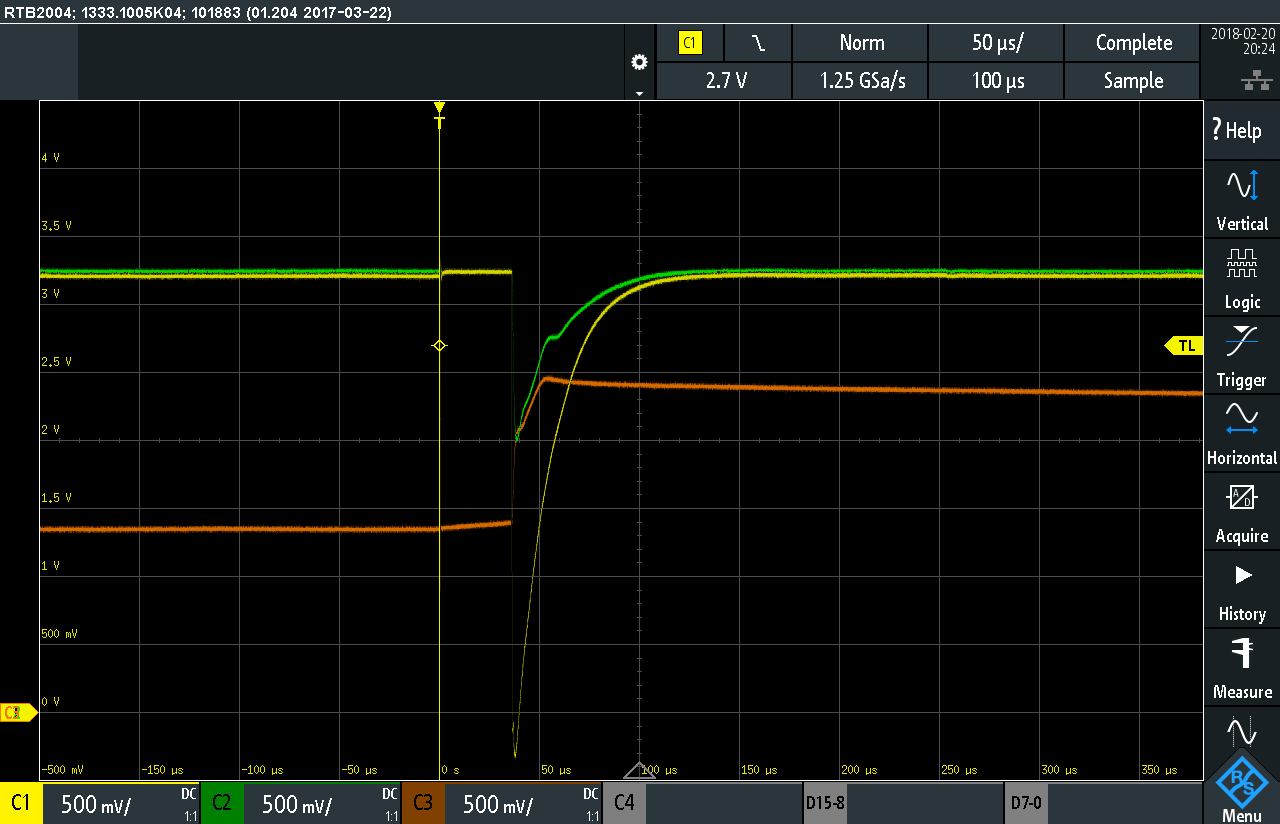

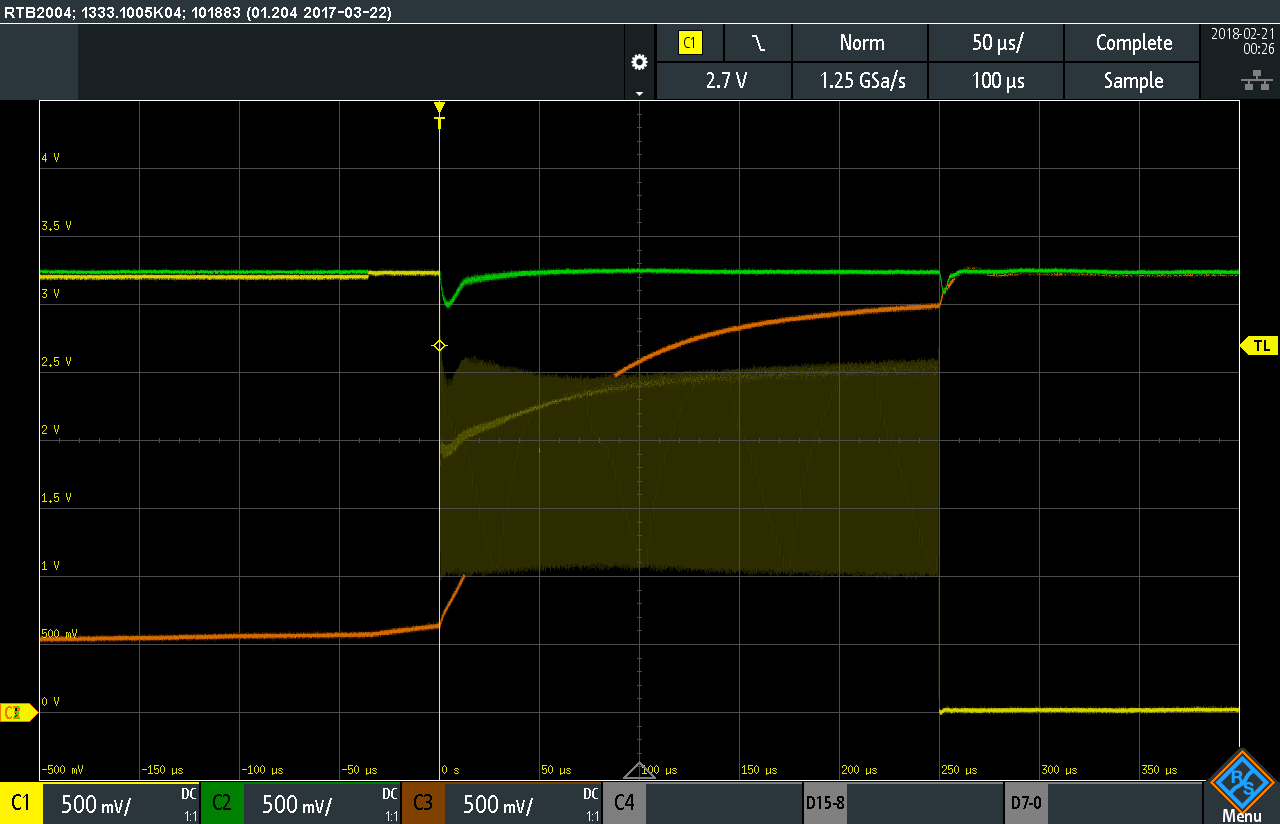

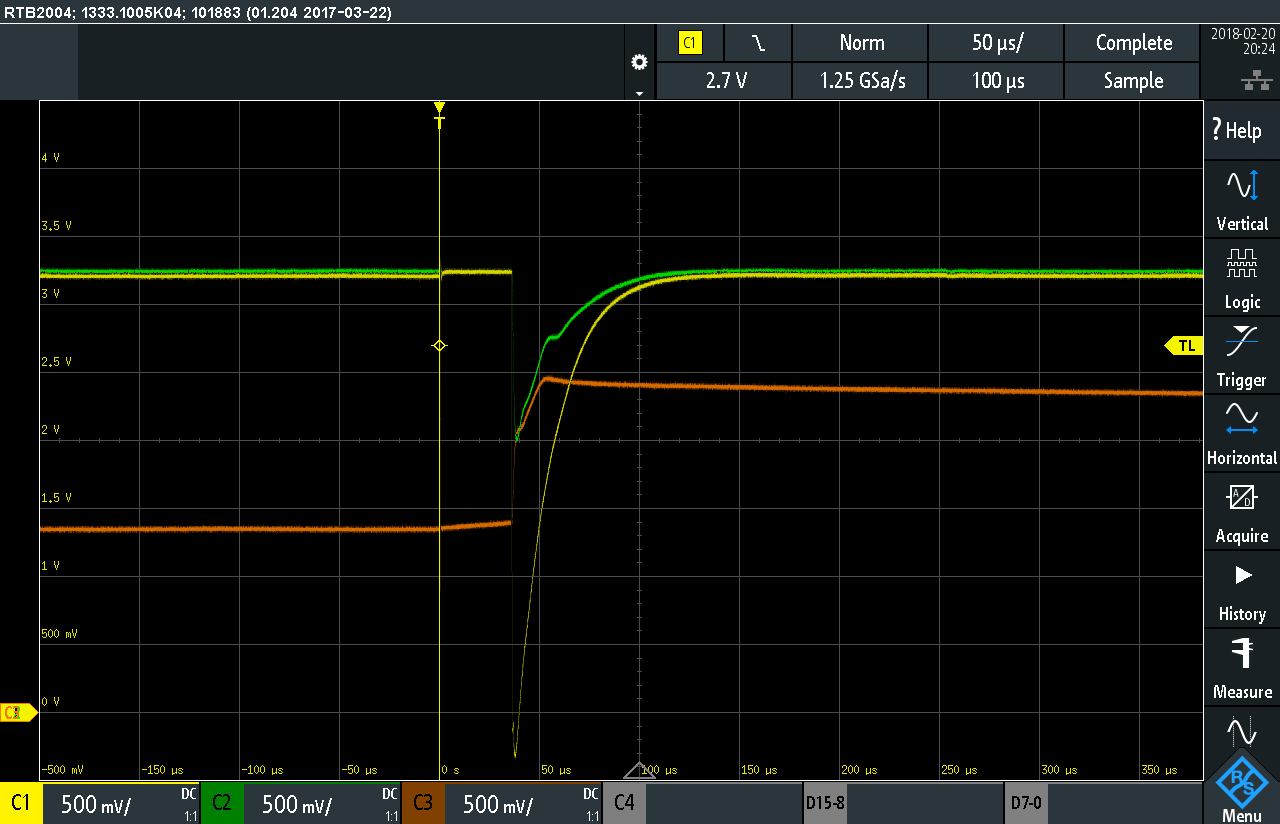

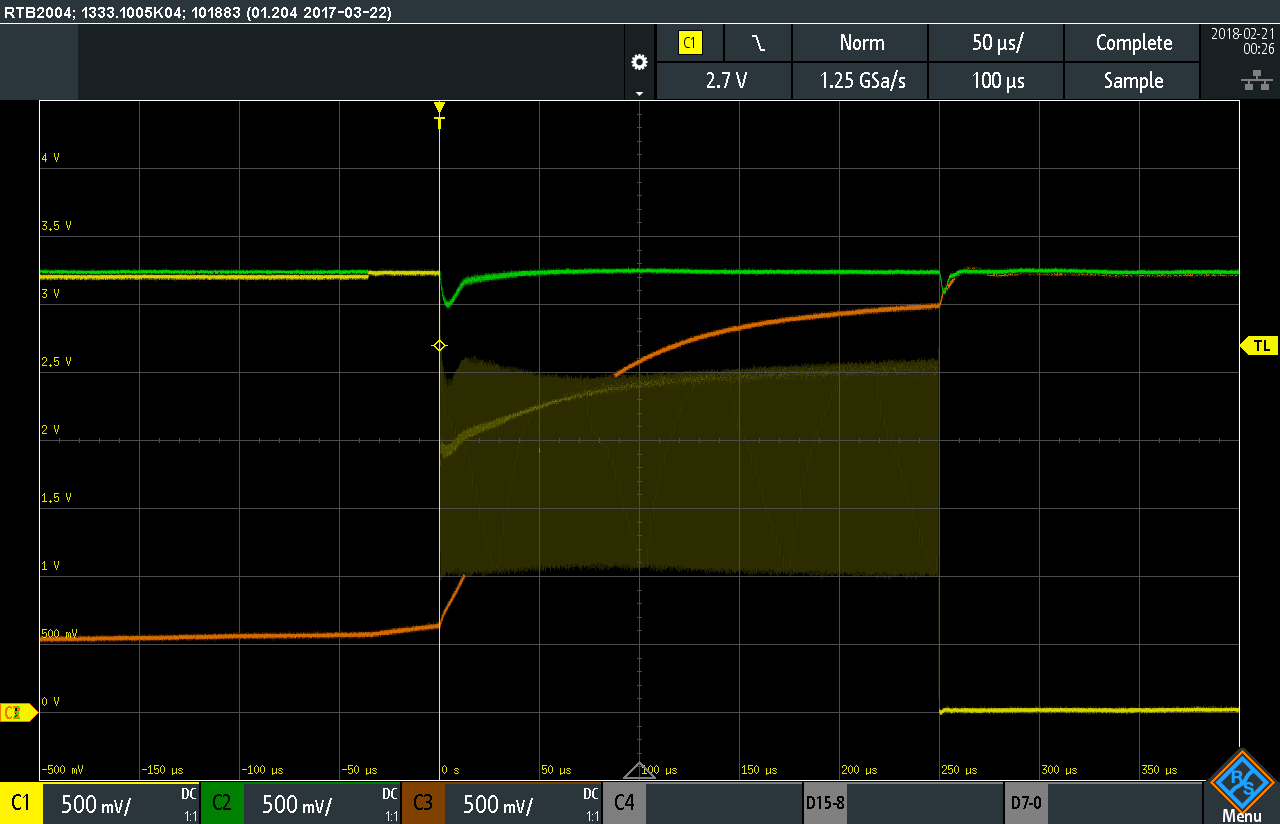

This prompted me to spend some time investigating VAA, VCC, and !VAA_ENABLE more thoroughly. I wanted to fully understand why VAA was sometimes partially charged and why the failure only happened when it was uncharged. I used an oscilloscope to probe all three signals simultaneously, and I tried triggering on changes to any of the three. Before long I found that triggering on !VAA_ENABLE was most fruitful. It turned out that !VAA_ENABLE was being pulled low very briefly at the approximate time of the failure. This signal was meant to remain high until the HackRF firmware pulls it low to switch on VAA. Why was the DFU bootloader toggling this pin before executing our firmware?

Had something changed in the DFU bootloader ROM? I used the Black Magic Probe to dump the ROM from one of the new microcontrollers, but it was the same as the ROM on older ones. I even swapped the microcontrollers of a good board and a bad board; the bad board continued to fail even with a known good microcontroller, and the good board never exhibited a problem with the new microcontroller installed. I investigated the behavior of !VAA_ENABLE on a good board and found that a similar glitch happened prior to the point in time at which the HackRF firmware pulls it low. I didn't understand what was different between a good board and a bad board, but it seemed that this behavior of !VAA_ENABLE was somehow responsible for the failure.

The transient change in !VAA_ENABLE caused a small rise in VAA and a brief, very small dip in VCC. It didn't look like this dip would be enough to cause a problem on the microcontroller, but, on the assumption that it might, I experimented with ways to avoid affecting VCC as much. I found that a reliable hardware workaround was to install a 1 kΩ resistor between VAA and VCC. This caused VAA to always be partially charged prior to !VAA_ENABLE being toggled, and it prevented the failure. It wasn't a very attractive workaround because there isn't a good place to install the resistor without changing the layout of the board, but we were able to confirm that it was effective on all boards that suffered from the failure.

Trying to determine why the DFU bootloader might toggle !VAA_ENABLE, I looked at the documented functions available on the microcontroller's pin that is used for that signal. Its default function is GPIO, but it has a secondary function as a part of an external memory interface. Was it possible that the DFU bootloader was activating the external memory interface when writing the firmware to internal RAM? Had I made a terrible error when I selected that pin years ago, unaware of this bootloader behavior?

Unfortunately the DFU bootloader is a ROM function provided by the microcontroller vendor, so we don't have source code for it. I did some cursory reverse engineering of the ROM but couldn't find any indication that it possesses the capability of activating the external memory interface. I tried using the Black Magic Probe to single step through instructions, but it wasn't fast enough to avoid USB timeouts while single stepping. I set a watchpoint on a register that should be set when powering up the external memory interface, but it never seemed to happen. Then I tried setting a watchpoint on the register that sets the pin function, and suddenly something very surprising was revealed to me. The first time the pin function was set was in my own code executing from RAM. The bootloader was actually executing my firmware even when the failure occurred!

After a brief moment of disbelief I realized what was going on. The reason I had thought that my firmware never ran was that the program counter pointed to ROM both before and after the failure, but that wasn't because my code never executed. A ROM function was running after the failure because the microcontroller was being reset during the failure. The failure was occurring during execution of my own code and was likely something I could fix in software! Part of the reason I had misinterpreted this behavior was that I had been thinking about the bootloader as "the DFU bootloader", but it is actually a unified bootloader that supports several different boot methods. Even when booting from flash memory, the default boot option for HackRF One, the first code executed by the microcontroller is the bootloader in ROM, which later passes control to the firmware in flash. You don't hold down the DFU button to cause the bootloader to execute; you hold down the button to instruct the bootloader to load code from USB DFU instead of flash.

Suddenly I understood that the memory corruption was something that happened as an effect of the failure; it wasn't part of the cause. I also understood why the failure did not seem to occur after a board passed testing once. During the test, firmware is written to flash. If the failure occurs at any time thereafter, the microcontroller resets and boots from flash, behaving similarly to how it would behave if it had correctly executed code that had been loaded via USB into RAM. The reason the board was stuck in a ROM function after a failure on a board with empty flash was simply that the bootloader was unable to detect valid firmware in flash after reset.
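
Both observations follow from the unified-bootloader behavior. Here is a deliberately simplified model of it, not derived from the vendor's ROM: the names `Board`, `rom_boot`, and `brownout_reset` are hypothetical, and the model assumes the DFU button has already been released by the time the glitch-induced reset occurs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Board:
    flash_valid: bool       # does flash hold an image the ROM accepts?
    dfu_button_held: bool   # is the DFU button held when the ROM runs?
    running: str = "rom"    # what the CPU is executing right now


def rom_boot(board: Board, usb_image: Optional[bytes] = None) -> str:
    """Simplified model of a unified ROM bootloader choosing a boot source."""
    if board.dfu_button_held:
        # DFU boot: stay in ROM until the host sends an image, then run it from RAM.
        board.running = "ram" if usb_image else "rom"
    elif board.flash_valid:
        board.running = "flash"
    else:
        # Nothing valid to run: remain in the ROM function.
        board.running = "rom"
    return board.running


def brownout_reset(board: Board) -> str:
    """A reset always re-enters the ROM; the DFU button is assumed released."""
    board.dfu_button_held = False
    board.running = "rom"
    return rom_boot(board)


new_board = Board(flash_valid=False, dfu_button_held=True)
print(rom_boot(new_board, usb_image=b"firmware"), brownout_reset(new_board))  # ram rom
tested_board = Board(flash_valid=True, dfu_button_held=True)
print(rom_boot(tested_board, usb_image=b"firmware"), brownout_reset(tested_board))  # ram flash
```

With empty flash, a reset during the RAM firmware leaves the CPU in ROM, which is what the debugger showed; with programmed flash, the same reset lands in flash firmware and looks like a successful boot.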

It seemed clear that the microcontroller must be experiencing a reset due to a voltage glitch on VCC, but the glitch that I had observed on failing boards seemed too small to have caused a reset. When I realized this, I took some more measurements of VCC and zoomed out to a wider view on the oscilloscope. There was a second glitch! The second glitch in VCC was much bigger than the first. It was also caused by !VAA_ENABLE being pulled low, but this time it was held low long enough to have a much larger effect on VCC. In fact, this was the same glitch that I had previously observed on known good boards. I then determined that the first glitch was caused by a minor bug in the way our firmware configured the GPIO pin. The second glitch was caused by the deliberate activation of !VAA_ENABLE.
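
Telling the two glitches apart amounts to finding every low pulse on !VAA_ENABLE and measuring VCC around it. A sketch of that analysis on an exported oscilloscope capture, assuming evenly sampled voltage lists for each channel; the function names, the 1.65 V logic threshold, and the synthetic data are hypothetical.

```python
def find_low_pulses(samples, sample_rate_hz, threshold_v=1.65):
    """Return (start_s, width_s) for each run of samples below threshold_v."""
    pulses = []
    start = None
    for i, v in enumerate(samples):
        if v < threshold_v and start is None:
            start = i                          # falling edge
        elif v >= threshold_v and start is not None:
            pulses.append((start / sample_rate_hz, (i - start) / sample_rate_hz))
            start = None                       # rising edge
    if start is not None:                      # still low when the capture ends
        pulses.append((start / sample_rate_hz,
                       (len(samples) - start) / sample_rate_hz))
    return pulses


def min_during(samples, sample_rate_hz, start_s, width_s):
    """Lowest value of another channel (e.g. VCC) while a pulse is low."""
    lo = round(start_s * sample_rate_hz)
    hi = round((start_s + width_s) * sample_rate_hz)
    return min(samples[lo:hi])


# Synthetic capture at 1 kHz (a real scope samples far faster):
# a 2-sample pulse and a 20-sample pulse on !VAA_ENABLE.
rate = 1000
enable = [3.3] * 100
vcc = [3.3] * 100
for i in (10, 11):
    enable[i] = 0.0
vcc[10] = 3.25
for i in range(50, 70):
    enable[i] = 0.0
vcc[55] = 3.0

for start, width in find_low_pulses(enable, rate):
    print(f"low at {start:.3f} s for {width * 1e3:.0f} ms, "
          f"VCC min {min_during(vcc, rate, start, width):.2f} V")
```

Measuring only inside each pulse, rather than triggering once, is what makes a short configuration glitch and a longer deliberate switch-on show up as separate events.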

When a good board starts up, it pulls !VAA_ENABLE low to activate the MOSFET that switches on VAA. At this time, quite a bit of current gets dumped into the capacitor (C105) in a short amount of time. This is a perfect recipe for causing a brief drop in VCC. I knew about this potential problem when I designed the circuit, but I guess I didn't carefully measure it at the time. It never seemed to cause a problem on my prototypes.

When a bad board starts up, the exact same thing happens except the voltage drop of VCC is just a little bit deeper. This causes a microcontroller reset, resulting in !VAA_ENABLE being pulled high again. During this brief glitch VAA becomes partially charged, which is why the RF LED is dimly lit after a failure. If VAA is partially charged before !VAA_ENABLE is pulled low, less current is required to fully charge it, so the voltage glitch on VCC isn't deep enough to cause a reset.
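
A rough charge-sharing estimate shows why a partly charged VAA avoids the reset. If the MOSFET switches on faster than the regulator can respond, C105 is charged from the capacitance on VCC, and VCC sags toward a weighted average of the two voltages. Every value below (both capacitances, the reset threshold, and the `partial` fraction) is hypothetical, chosen only so the example sits near the threshold the way the text describes; trace resistance, the regulator, and the load on VAA are ignored.

```python
VCC_NOMINAL = 3.3    # volts
C_VCC = 100e-6       # hypothetical total capacitance on VCC (F)
C_VAA = 10e-6        # hypothetical stand-in for C105 (F)
RESET_BELOW = 3.05   # hypothetical MCU reset threshold (V)


def vcc_after_switch_on(vaa0: float) -> float:
    """VCC just after the switch closes, if the two capacitors share charge."""
    return (C_VCC * VCC_NOMINAL + C_VAA * vaa0) / (C_VCC + C_VAA)


def boot_attempts(vaa0: float, partial: float = 0.6, max_attempts: int = 5):
    """Count attempts until switching on VAA no longer resets the MCU.

    `partial` is a hypothetical fraction of the way VAA gets toward the
    shared voltage before the reset pulls !VAA_ENABLE high again. VCC is
    assumed to recover to nominal between attempts, and VAA not to decay.
    """
    vaa = vaa0
    for attempt in range(1, max_attempts + 1):
        v_shared = vcc_after_switch_on(vaa)
        if v_shared >= RESET_BELOW:
            return attempt, vaa                # no reset: the boot proceeds
        vaa += partial * (v_shared - vaa)      # reset leaves VAA partly charged
    return None, vaa


print(boot_attempts(0.0))   # VAA fully discharged, RF LED off
print(boot_attempts(2.0))   # VAA near the LED forward voltage, LED dim
```

In this model a board that starts with VAA discharged resets once and then boots, while a board whose VAA is already near 2 V boots on the first try, the same pattern as the reset-button retries.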

At this point I figured out that the reason the state of the RF LED is unpredictable after power is applied is that it depends on how long power has been removed from the board. If you unplug a board with VAA at least partially charged but then plug it back in within two seconds, VAA will still be partially charged. If you leave it disconnected from power for at least five seconds, VAA will be thoroughly discharged and the RF LED will be off after plugging it back in.

This sort of voltage glitch is something hardware hackers introduce at times as a fault injection attack to cause microcontrollers to misbehave in useful ways. In this case, my microcontroller was glitching itself, which was not a good thing! Fortunately I was able to fix the problem by rapidly toggling !VAA_ENABLE many times, causing VAA to charge more slowly and avoiding the VCC glitch.
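
The benefit of many short pulses can be seen with the same kind of charge-sharing estimate. This sketch assumes each brief low pulse on !VAA_ENABLE moves only a fixed fraction of the charge that a full switch-on would move, and that the regulator restores VCC between pulses; the capacitances, the fraction, and the pulse count are hypothetical, and the firmware's actual toggle timing is not shown here.

```python
VCC_NOMINAL = 3.3   # volts
C_VCC = 100e-6      # hypothetical total capacitance on VCC (F)
C_VAA = 10e-6       # hypothetical stand-in for C105 (F)


def charge_vaa(fraction: float, pulses: int, vaa0: float = 0.0):
    """Return (per-pulse VCC dips, final VAA) for a train of switch-on pulses.

    fraction=1.0 with pulses=1 models switching the MOSFET fully on at once.
    """
    c_series = C_VCC * C_VAA / (C_VCC + C_VAA)
    vaa = vaa0
    dips = []
    for _ in range(pulses):
        # Charge moved during this pulse, drawn from the VCC capacitance.
        q = fraction * c_series * (VCC_NOMINAL - vaa)
        dips.append(q / C_VCC)   # VCC sags by q / C_VCC ...
        vaa += q / C_VAA         # ... while VAA rises by q / C_VAA
    return dips, vaa


single_dips, _ = charge_vaa(fraction=1.0, pulses=1)
pulsed_dips, vaa = charge_vaa(fraction=0.01, pulses=1000)
print(f"one switch-on: worst dip {max(single_dips):.3f} V")
print(f"1000 pulses:   worst dip {max(pulsed_dips):.4f} V, VAA {vaa:.2f} V")
```

The worst-case dip scales with the fraction moved per pulse, which is the point of toggling rather than switching on once; in real hardware that fraction depends on the MOSFET's gate drive and how quickly the pin is toggled.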

I'm still not entirely sure why boards from the new production seem to be more sensitive to this failure than older boards, but I have a guess. My guess is that a certain percentage of units have always suffered from this problem but that they have gone undetected. The people programming the boards in previous productions may have figured out on their own that they could save time by using the reset button instead of unplugging a board and plugging it back in to try again. If they did so, they would have had a very high success rate on second attempts even when programming failed the first time. If a new employee or two were doing the programming this time, they may have followed their instructions more carefully, removing failing boards from power before re-testing them.

Even if my guess is wrong, it seems that my design was always very close to having this problem. Known good boards suffered from less of a glitch, but they still experienced a glitch that was close to the threshold that would cause a reset. It is entirely possible that subtle changes in the characteristics of capacitors or other components on the board could cause this glitch to be greater or smaller from one batch to the next.
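
To illustrate how part-to-part variation can push a design that sits near a threshold across it, here is a small Monte Carlo sketch using a charge-sharing estimate of the switch-on dip. The uniform +/-20% capacitor tolerance, the nominal values, and the reset threshold are all hypothetical; the point is only that a small shift in a nominal value can change the fraction of boards that reset.

```python
import random

VCC_NOMINAL = 3.3     # volts
RESET_BELOW = 3.05    # hypothetical MCU reset threshold (V)


def reset_fraction(c_vaa_nom: float, c_vcc_nom: float = 100e-6,
                   tol: float = 0.2, n: int = 10_000, seed: int = 1) -> float:
    """Fraction of simulated boards that reset on a cold switch-on of VAA.

    Each board draws both capacitances uniformly within +/- tol of nominal.
    A fixed seed makes every call see the same tolerance draws.
    """
    rng = random.Random(seed)
    resets = 0
    for _ in range(n):
        c_vaa = c_vaa_nom * rng.uniform(1 - tol, 1 + tol)
        c_vcc = c_vcc_nom * rng.uniform(1 - tol, 1 + tol)
        # VAA starts at 0 V, so charge sharing leaves VCC at this level.
        vcc_after = c_vcc * VCC_NOMINAL / (c_vcc + c_vaa)
        if vcc_after < RESET_BELOW:
            resets += 1
    return resets / n


for nominal in (6.0e-6, 7.5e-6, 9.0e-6):
    print(f"C105 stand-in {nominal * 1e6:.1f} uF: "
          f"{reset_fraction(nominal):.1%} of boards reset")
```

Because the seed is fixed, each call reuses the same tolerance draws, so a larger nominal capacitance can only add boards to the failing set; that makes the comparison between "batches" a like-for-like one.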

Once a HackRF One has had its flash programmed, the problem is very likely to go undetected forever. It turns out that this glitch can happen even when a board is booted from flash, not just when starting it up in DFU mode. When starting from flash, however, a glitch-induced reset results in another boot from flash, this time with VAA charged up a little bit more. After one or two resets that happen in the blink of an eye, it starts up normally without a glitch. Unless you know what to look for, it is quite unlikely that you would ever detect the fault.

Because of this and the fact that we didn't have a way to distinguish between firmware running from flash and firmware running from RAM, the failure was difficult for us to reproduce and observe reliably before we understood it. Another thing that complicated troubleshooting was that I was very focused on looking for something that had changed since the previous production. It turned out that the voltage glitch was only subtly worse than it was on the older boards I tested, so I overlooked it as a possible cause. I don't know that it was necessarily wrong to have this focus, but I might have found the root cause faster had I concentrated more on understanding the problem and less on trying to find things that had changed.

In the end I found that it was my own hardware design that caused the problem. It was another example of something Jared Boone often says. I call it ShareBrained's Razor: "If your project is broken, it is probably your fault." It isn't your compiler or your components or your tools; it is something you did yourself.

Thank you to everyone who helped with this troubleshooting process, especially the entire GSG team, Etonnet, and Kate Temkin. Also thank you to the pioneers of antibiotics, without which I would have had a significantly more difficult recovery from the bronchitis that afflicted me during this effort!